LLMs in Autonomous Driving — Part 4

Let’s continue with two important papers from Google:

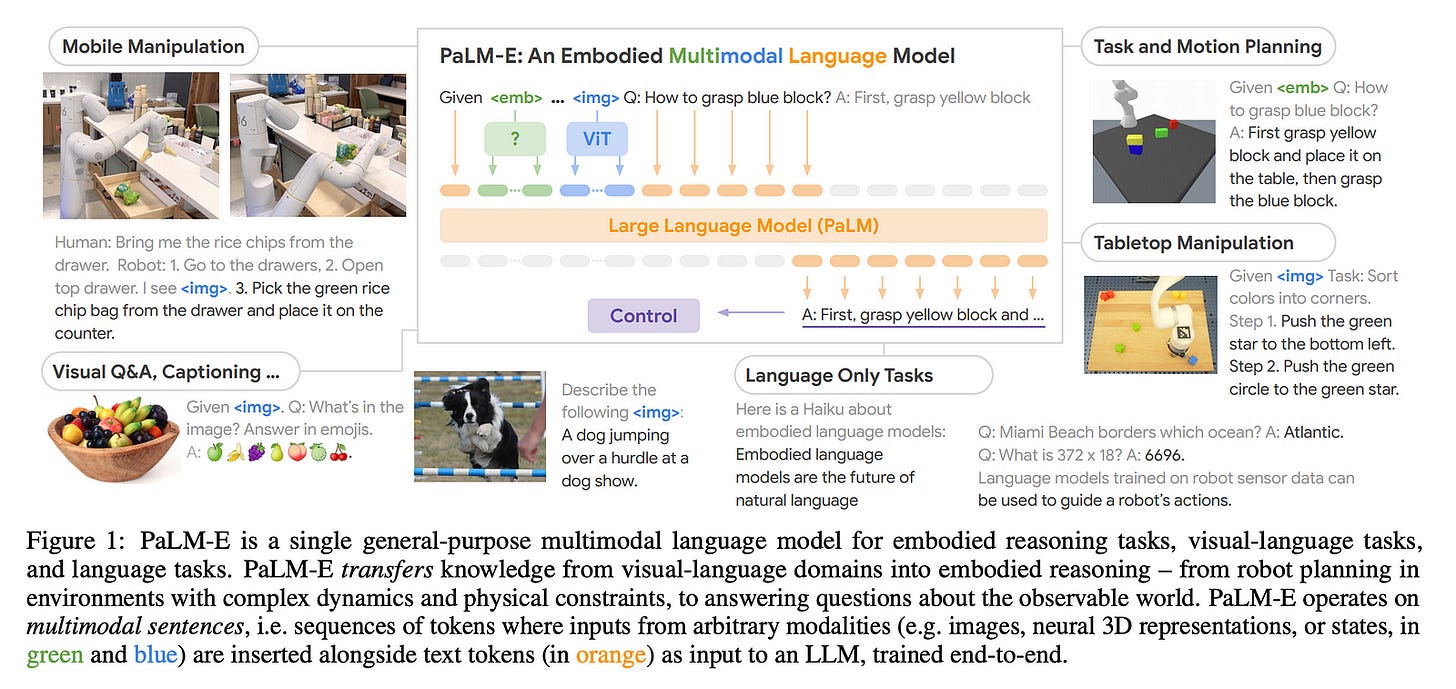

PaLM-E: An Embodied Multimodal Language Model

The core idea behind PaLM-E is to integrate real-time observations (such as images, state estimates, and other sensor data) directly into the representational space of a pre-trained large language model (LLM). These observations are encoded as sequences of ve…
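A minimal sketch of this idea, assuming a toy setup: each continuous observation is mapped into vectors with the same width as the LLM's token embeddings, and those vectors take the place of a placeholder token in the prompt. This interleaving yields what the PaLM-E paper calls a "multimodal sentence". Everything here is hypothetical and for illustration only. The names `make_token_embeddings`, `project`, `build_multimodal_sequence`, the `<img>` placeholder, and the 4-dimensional embedding are assumptions. PaLM-E itself uses learned encoders (for example, a ViT for images) and the embedding table of a real pre-trained LLM, not a hand-written linear map.

```python
import random
from typing import Dict, List, Sequence

Vector = List[float]

# Hypothetical embedding width; real LLM embeddings are thousands of dimensions wide.
EMBED_DIM = 4


def make_token_embeddings(vocab: Sequence[str], dim: int, seed: int = 0) -> Dict[str, Vector]:
    """Hypothetical stand-in for the (frozen) LLM word-embedding table."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}


def project(features: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Affine map from an observation's feature space into the LLM embedding space.

    `weights` has one row per embedding dimension, so the output width equals len(weights).
    """
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]


def build_multimodal_sequence(
    prompt: Sequence[str],
    token_emb: Dict[str, Vector],
    observations: Dict[str, List[Vector]],
    weights: List[Vector],
    bias: Vector,
) -> List[Vector]:
    """Interleave text-token embeddings with projected observation vectors.

    Each placeholder token in `observations` (e.g. "<img>") is replaced by one
    embedding per feature vector of that observation, so a single image can
    contribute several positions to the sequence the LLM sees.
    """
    sequence: List[Vector] = []
    for tok in prompt:
        if tok in observations:
            for feat in observations[tok]:
                sequence.append(project(feat, weights, bias))
        else:
            sequence.append(token_emb[tok])
    return sequence


if __name__ == "__main__":
    tokens = make_token_embeddings(["Q:", "what", "is", "in", "?"], EMBED_DIM)
    # Hypothetical 3-dim observation features projected to EMBED_DIM = 4.
    W = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
    b = [0.0, 0.0, 0.0, 0.5]
    obs = {"<img>": [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]}
    seq = build_multimodal_sequence(["Q:", "what", "is", "in", "<img>", "?"], tokens, obs, W, b)
    print(len(seq), "positions, each of width", len(seq[0]))
```

Because the observation vectors share the token-embedding width, the language model can process the interleaved sequence like ordinary text input, which is what lets a single model reason jointly over words and sensor data.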


Subscribe to Isaac’s Newsletter to keep reading this post and get 7 days of free access to the full post archives.